Feature: Run Google's Gemma 3 LLM Anywhere with Muna and llama.cpp

We're launching experimental support for text generation with LLMs.

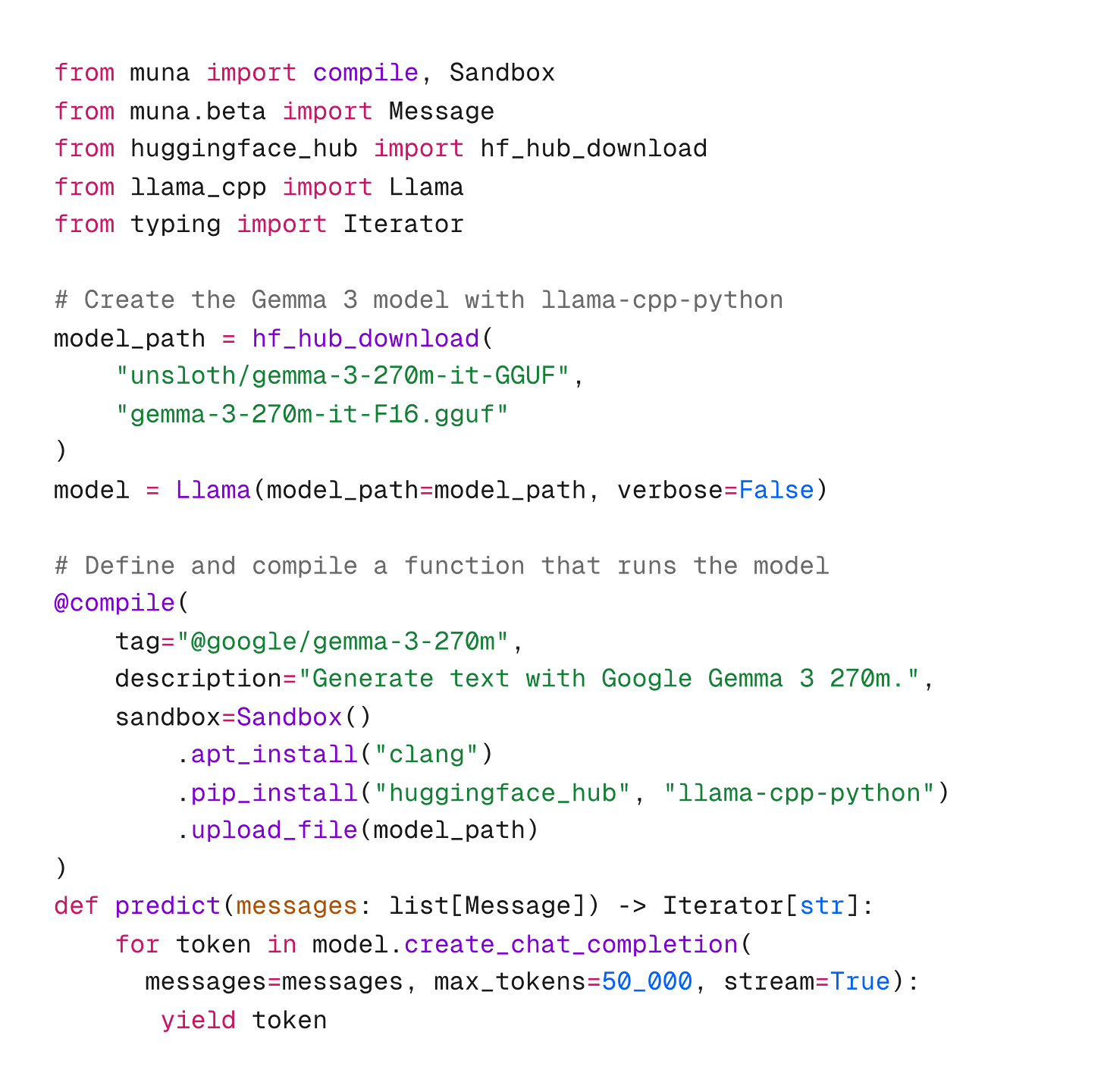

Over the past few weeks, we’ve been working to add support for LLM inference to Muna. Muna lets you write Python functions and compile them to run anywhere, in as few as two lines of code:

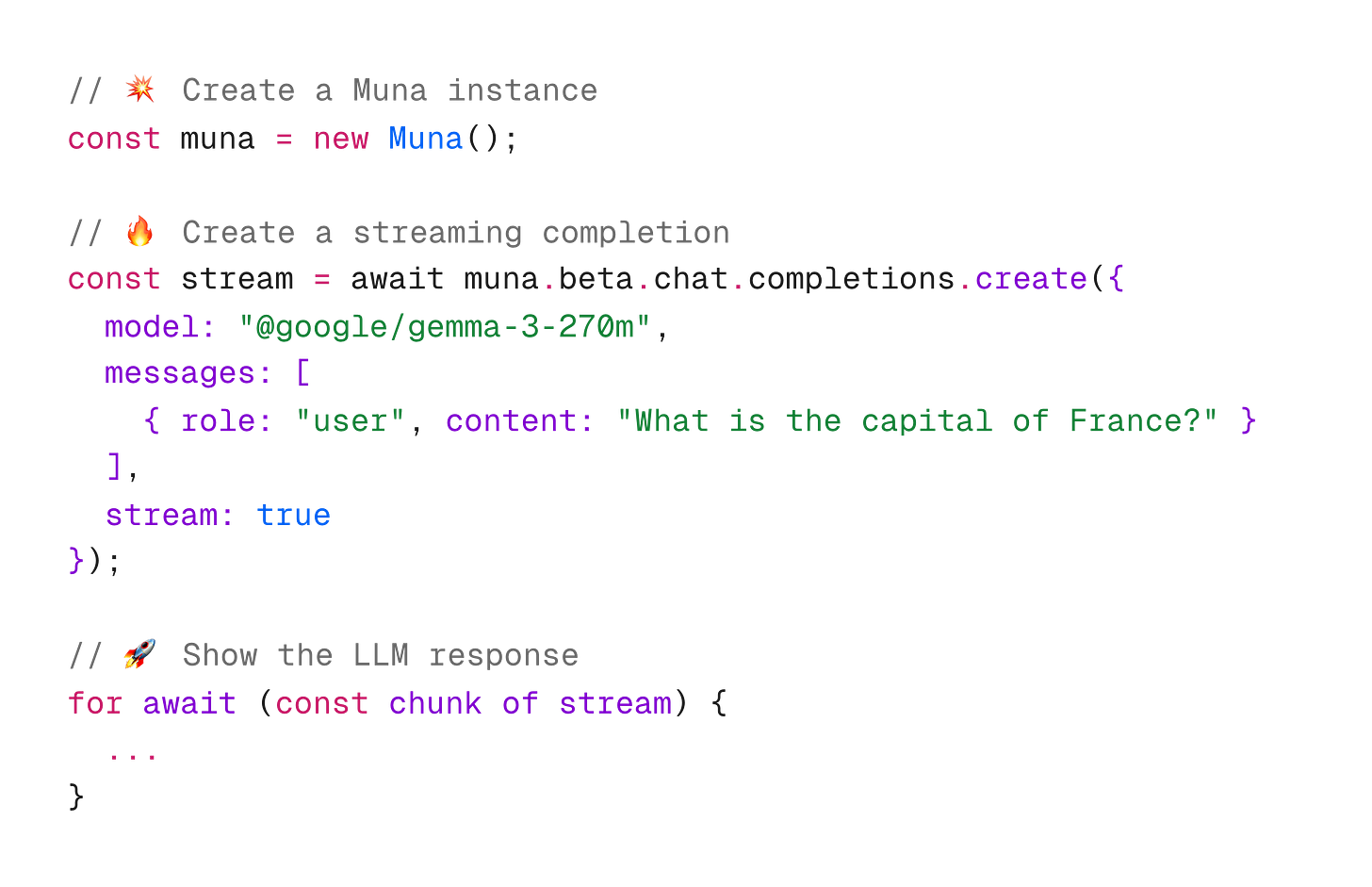

And since most developers are used to calling `openai.chat.completions.create(…)` in their code, we decided to implement a similar, experimental API in our client libraries.
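To illustrate the idea, here is a minimal sketch that assumes nothing about Muna's real client internals. A hypothetical `LocalChatClient` (not part of Muna or the OpenAI SDK) exposes a `chat.completions.create(...)` method over any local text-generation function and returns a dictionary shaped like OpenAI's Chat Completions response. The toy `echo_generate` backend is also hypothetical and simply stands in for a real model.

```python
import time
import uuid
from types import SimpleNamespace
from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical backend signature: (model, messages) -> generated text.
GenerateFn = Callable[[str, List[Message]], str]


class _Completions:
    """Provides the `create` method reached via client.chat.completions."""

    def __init__(self, generate: GenerateFn) -> None:
        self._generate = generate

    def create(self, *, model: str, messages: List[Message]) -> dict:
        # Run the local model, then wrap its text in an OpenAI-shaped response.
        content = self._generate(model, messages)
        return {
            "id": f"chatcmpl-{uuid.uuid4().hex}",
            "object": "chat.completion",
            "created": int(time.time()),
            "model": model,
            "choices": [
                {
                    "index": 0,
                    "message": {"role": "assistant", "content": content},
                    "finish_reason": "stop",
                }
            ],
        }


class LocalChatClient:
    """Hypothetical client exposing client.chat.completions.create(...)."""

    def __init__(self, generate: GenerateFn) -> None:
        self.chat = SimpleNamespace(completions=_Completions(generate))


def echo_generate(model: str, messages: List[Message]) -> str:
    # Toy stand-in for a real LLM: echo the most recent user message.
    last_user = next(m["content"] for m in reversed(messages) if m["role"] == "user")
    return f"You said: {last_user}"


client = LocalChatClient(echo_generate)
response = client.chat.completions.create(
    model="toy-model",
    messages=[{"role": "user", "content": "Hello!"}],
)
print(response["choices"][0]["message"]["content"])  # You said: Hello!
```

The point of this design is that calling code stays the same whether the backend is a hosted API or a model running on-device; only the `generate` function changes. Note that the official OpenAI Python SDK returns typed objects with attribute access (`response.choices[0].message.content`) rather than plain dictionaries.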

We’ve implemented this in our Python, JavaScript (browser + Node.js), and Android client libraries, and the same API is coming to our Swift, React Native, and Unity Engine client libraries. Come talk to us about it in our Slack community.

Try out this demo project. It runs Google’s Gemma 3 270M in Python using the llama-cpp-python library, and includes a Next.js web app that runs the compiled LLM locally in the browser.
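Before generation, an OpenAI-style `messages` list has to be rendered into the prompt format the model was trained on. Below is a simplified Python re-implementation of Gemma 3's published chat template: turns are wrapped in `<start_of_turn>` / `<end_of_turn>`, the assistant role is called `model`, and a leading system message is folded into the first user turn. The function name `format_gemma3_prompt` is hypothetical, and it skips the real template's image handling. Runtimes built on llama.cpp typically apply the template stored with the model themselves, so treat this as an explanatory sketch rather than something you need to call.

```python
from typing import Dict, List

Message = Dict[str, str]


def format_gemma3_prompt(
    messages: List[Message],
    add_generation_prompt: bool = True,
    bos: str = "<bos>",
) -> str:
    """Render OpenAI-style chat messages into a Gemma 3 prompt string (simplified)."""
    # Gemma 3 has no system role: a leading system message is prepended
    # to the first user turn, separated by a blank line.
    first_user_prefix = ""
    turns = messages
    if messages and messages[0]["role"] == "system":
        first_user_prefix = messages[0]["content"] + "\n\n"
        turns = messages[1:]

    parts = [bos]
    for index, message in enumerate(turns):
        # Roles must alternate user / assistant, starting with user.
        expected = "user" if index % 2 == 0 else "assistant"
        if message["role"] != expected:
            raise ValueError("Conversation roles must alternate user/assistant/user/...")
        # Gemma names the assistant role "model".
        role = "model" if message["role"] == "assistant" else "user"
        prefix = first_user_prefix if index == 0 else ""
        parts.append(f"<start_of_turn>{role}\n{prefix}{message['content'].strip()}<end_of_turn>\n")

    if add_generation_prompt:
        # Open a model turn so the LLM continues generating from here.
        parts.append("<start_of_turn>model\n")
    return "".join(parts)


prompt = format_gemma3_prompt([
    {"role": "system", "content": "You are terse."},
    {"role": "user", "content": "Hi!"},
])
print(repr(prompt))
```

The tokens the model produces after the trailing `<start_of_turn>model\n` become the `content` of the assistant message in an OpenAI-style response, and generation usually stops once the model emits `<end_of_turn>`.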

Happy AI-ing.