Run Embedding Gemma Anywhere with Muna's OpenAI Client

From OpenAI to open-source embedding models in two lines of code.

Today, Muna supports running Google’s Embedding Gemma text embedding model locally and in the cloud—by changing only one line of code.

Embedding Gemma has shown very strong performance across a range of tasks, including question answering, clustering, and summarization. Along with it, we are launching our OpenAI client in our Python and JavaScript libraries.

From OpenAI to Open Source

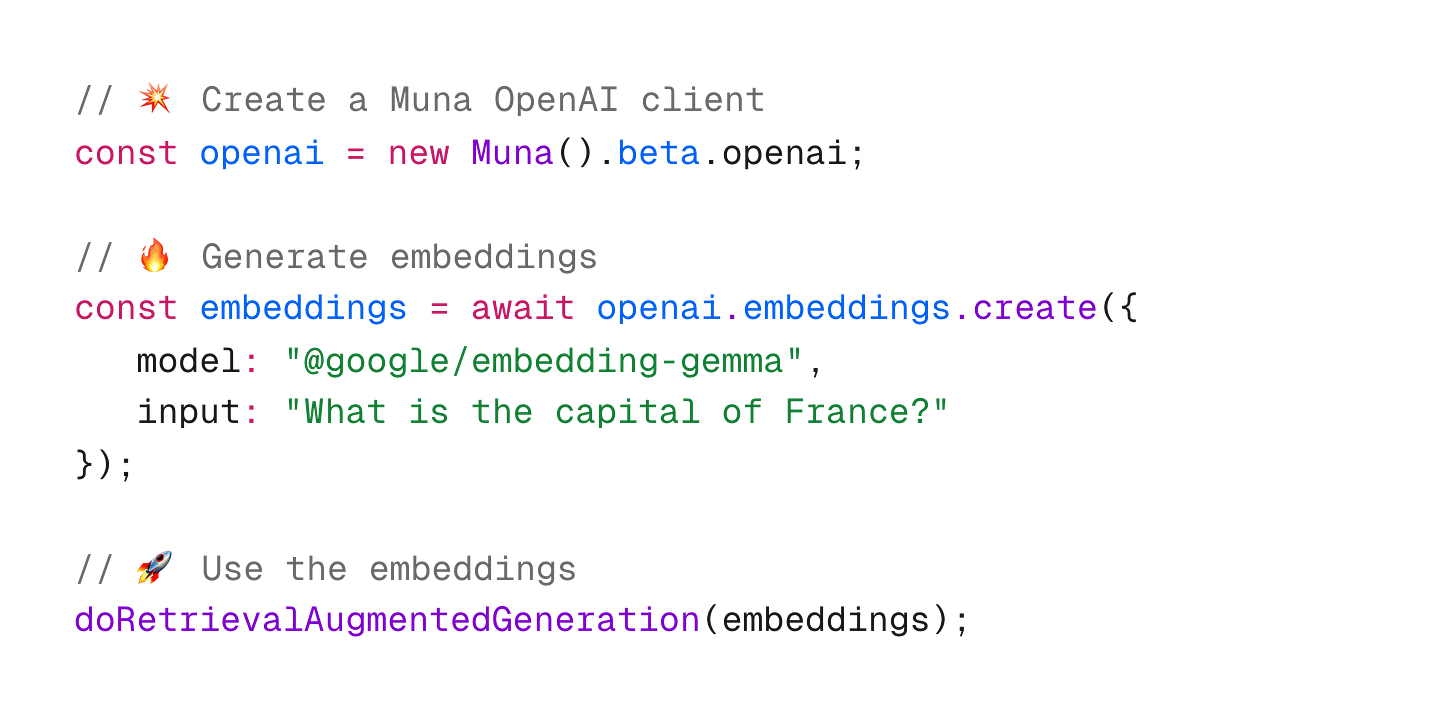

While designing an interface for developers to use Embedding Gemma, we were faced with a stark reality: the overwhelming majority of developers use text embedding models via OpenAI’s client libraries.

To make the transition as smooth and painless as possible, we are shipping an OpenAI client in our SDKs that will allow engineering teams to run open-source text embedding models with almost the exact same code.

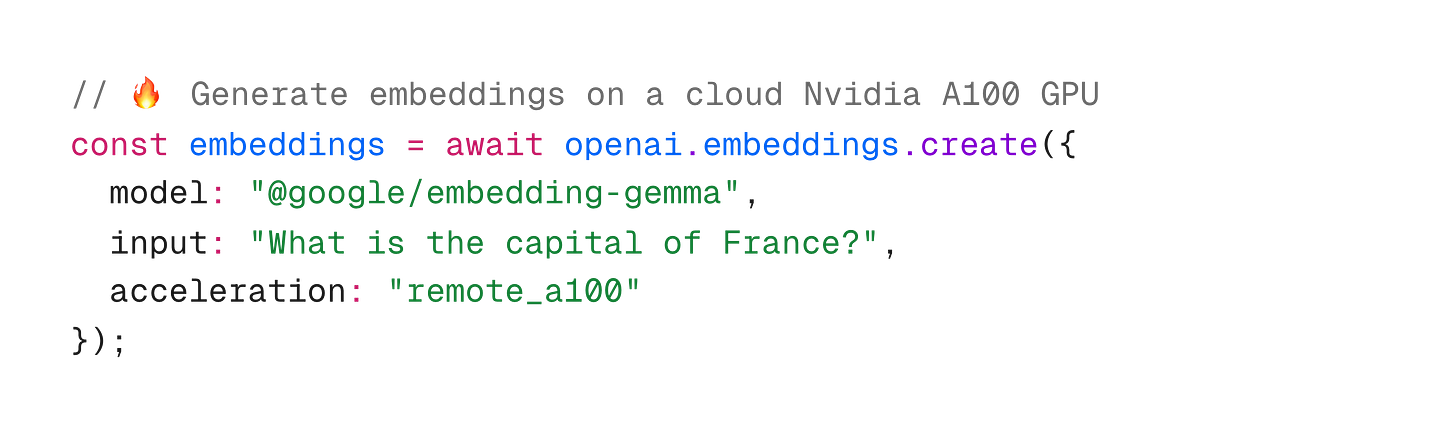
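To see why swapping clients can be such a small change, here is a minimal sketch of application code that depends only on the OpenAI-style `embeddings.create(model=..., input=...)` call and the `response.data[i].embedding` response shape. Everything else is an assumption for illustration: `HypotheticalLocalEmbeddings` is a stand-in that returns toy vectors, not Muna's actual client class, and the model name is a placeholder.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Embedding:
    index: int
    embedding: list[float]


@dataclass
class EmbeddingResponse:
    data: list[Embedding]
    model: str


class EmbeddingsAPI(Protocol):
    """The only surface the application code relies on."""

    def create(self, *, model: str, input: list[str]) -> EmbeddingResponse: ...


class HypotheticalLocalEmbeddings:
    """Stand-in for an OpenAI-style `embeddings` namespace (hypothetical, not Muna's API)."""

    def create(self, *, model: str, input: list[str]) -> EmbeddingResponse:
        # Toy vectors: [character count, word count]. A real client would run the model.
        data = [
            Embedding(index=i, embedding=[float(len(text)), float(len(text.split()))])
            for i, text in enumerate(input)
        ]
        return EmbeddingResponse(data=data, model=model)


def embed_documents(embeddings: EmbeddingsAPI, model: str, docs: list[str]) -> list[list[float]]:
    """Application code: unchanged no matter which provider sits behind `embeddings`."""
    response = embeddings.create(model=model, input=docs)
    return [item.embedding for item in response.data]


# Placeholder model name, for illustration only.
vectors = embed_documents(HypotheticalLocalEmbeddings(), "example/embedding-model", ["hello world", "muna"])
```

Because `embed_documents` only touches the shared call shape, replacing the object passed as `embeddings` is the only edit needed to change where the model runs.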

Thanks to Muna’s compiler technology, the embedding models will be downloaded and run locally by default. That said, developers can run the models on cloud GPUs with only one additional line of code.

If you already have compute resources (VPC, bare metal), reach out to us for how to run your compiled embedding models on your own infrastructure.

Bring Your Own Embedding Model

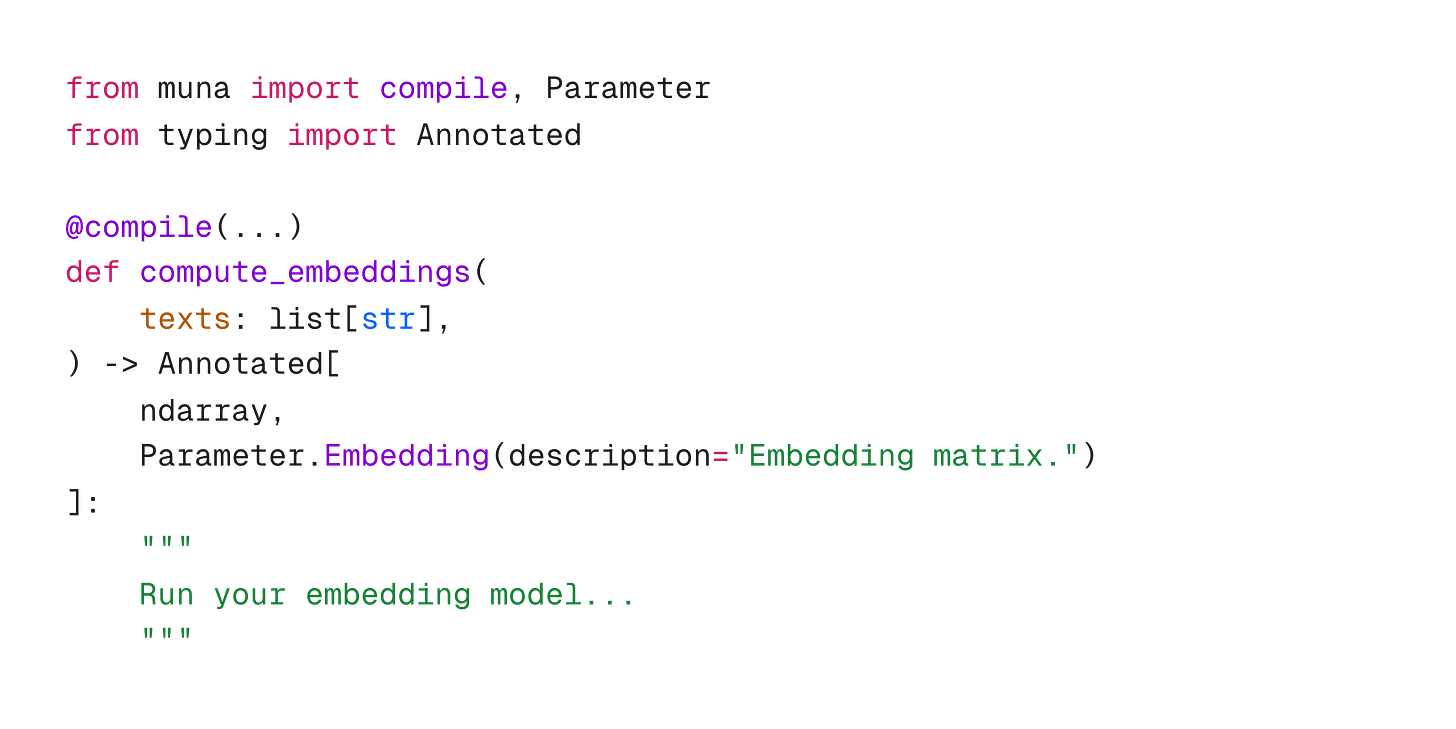

You can compile any Python function to be compatible with our OpenAI client’s embedding interface, using just a few type hints.

With this, engineering teams can compile their own custom or fine-tuned models with Muna, and run them anywhere. The Embedding Gemma model itself was compiled with under 100 lines of Python code.
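As a rough illustration of the kind of function described above, here is a type-hinted Python function that maps a list of texts to one vector per text. This is only a sketch under stated assumptions: the feature-hashing "model" is a toy, the names `embed_texts` and `cosine_similarity` are hypothetical, and the Muna compile step itself is not shown because its exact signature and required types are not given here.

```python
import hashlib
import math


def embed_texts(texts: list[str], dimensions: int = 8) -> list[list[float]]:
    """Toy feature-hashing embedder: returns one L2-normalized vector per input text."""
    vectors: list[list[float]] = []
    for text in texts:
        vector = [0.0] * dimensions
        for token in text.lower().split():
            # hashlib gives a stable bucket across runs (the built-in hash() is salted per process).
            digest = hashlib.sha256(token.encode("utf-8")).digest()
            vector[digest[0] % dimensions] += 1.0
        # Fall back to 1.0 so an empty text yields a zero vector instead of dividing by zero.
        norm = math.sqrt(sum(x * x for x in vector)) or 1.0
        vectors.append([x / norm for x in vector])
    return vectors


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # Inputs are already unit length, so the dot product equals the cosine.
    return sum(x * y for x, y in zip(a, b))


# The same words in a different order hash to the same buckets.
a, b = embed_texts(["open source embeddings", "embeddings open source"])
similarity = cosine_similarity(a, b)
```

A custom or fine-tuned model would replace the hashing loop with real inference, while the signature (texts in, vectors out) stays the same.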

If you build something fun with this, tag us—we’ll feature it in subsequent blog posts. And if you have any questions,