Model: Detect Objects in Python, Mobile, and Web with RT-DETR

Compiling RT-DETR to run anywhere in only 150 lines of code.

Real-Time Detection Transformer (RT-DETR) is a leading object detection model. Thanks to its transformer architecture, it outperforms YOLO detectors of comparable size in both speed and accuracy on the COCO benchmark. Normally, to deploy this model, developers must first export the model weights from PyTorch to an inference format of their choice (e.g. ONNX, TensorRT), then write C++ code to run the model in their target environment.

Compiling the Model with Muna

Muna fully automates writing deployment-ready C++ code for model inference. First, we wrote a Python function that takes an image, runs the model, and returns a set of Detection objects. All of this fits in just 150 lines of Python. From there, we compiled the function [1] with Muna:

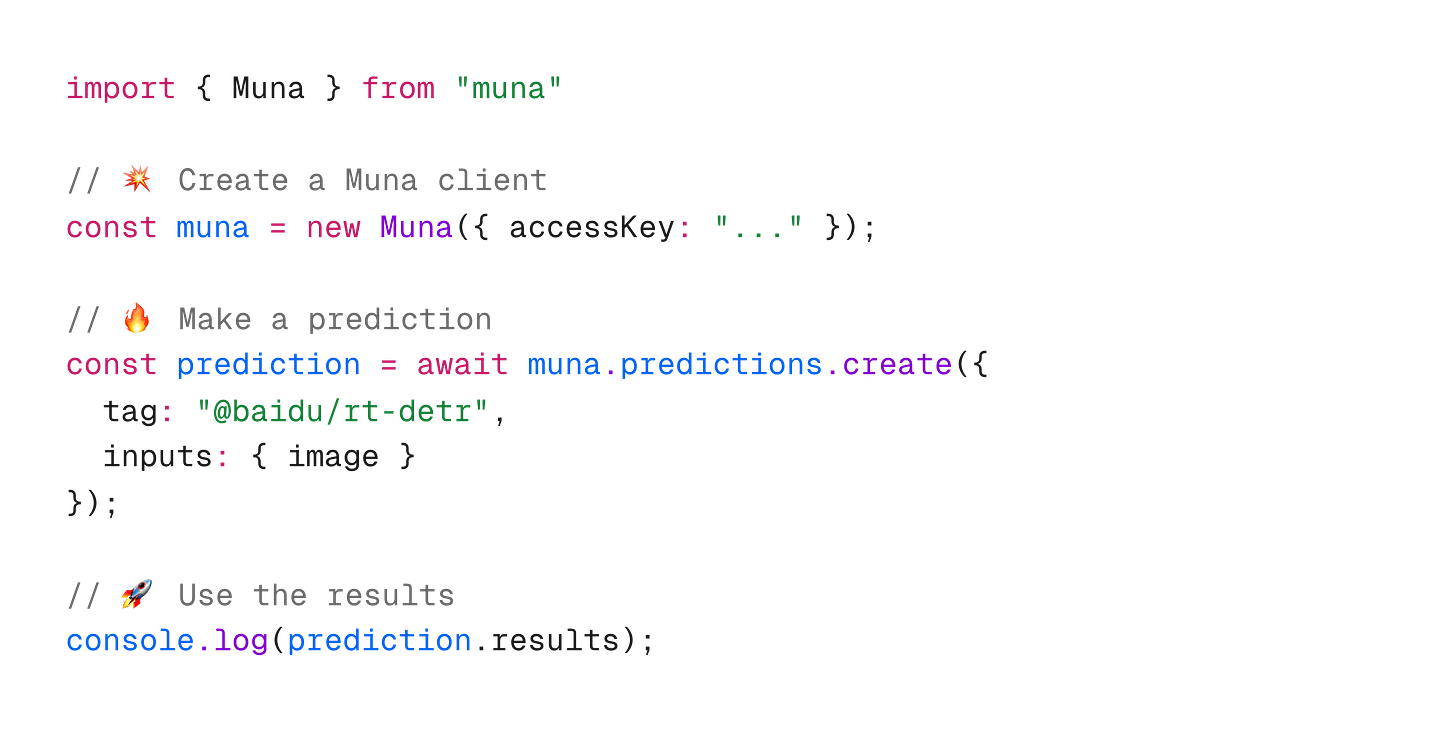
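The function itself isn't reproduced here, but its last step, turning raw model outputs into Detection objects, can be sketched as follows. This is a minimal illustration, not the code we compiled. It assumes the model emits, for each query, one box in normalized (cx, cy, w, h) form plus one raw logit per class, and it applies sigmoid scoring with top-k selection, similar to the decoding in the reference RT-DETR postprocessor. The Detection fields, the postprocess name, and the min_score threshold are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Detection:
    # Hypothetical fields: a box in pixel coordinates plus class index and confidence.
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: int
    score: float


def sigmoid(x: float) -> float:
    # Numerically stable logistic function (avoids overflow for very negative x).
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def postprocess(
    boxes: list[list[float]],   # assumed: one normalized (cx, cy, w, h) box per query
    logits: list[list[float]],  # assumed: one row of raw class logits per query
    image_width: int,
    image_height: int,
    top_k: int = 300,
    min_score: float = 0.5,
) -> list[Detection]:
    # Score every (query, class) pair independently with a sigmoid.
    scored = [
        (sigmoid(logit), query, label)
        for query, row in enumerate(logits)
        for label, logit in enumerate(row)
    ]
    # Keep the top-k pairs, highest score first.
    scored.sort(key=lambda item: item[0], reverse=True)
    detections: list[Detection] = []
    for score, query, label in scored[:top_k]:
        if score < min_score:
            break  # scores are sorted, so every remaining pair is lower
        cx, cy, w, h = boxes[query]
        # Convert center/size in [0, 1] to corner coordinates in pixels.
        detections.append(
            Detection(
                x_min=(cx - w / 2) * image_width,
                y_min=(cy - h / 2) * image_height,
                x_max=(cx + w / 2) * image_width,
                y_max=(cy + h / 2) * image_height,
                label=label,
                score=score,
            )
        )
    return detections
```

Note that there is no non-maximum suppression step: DETR-style models predict a set of boxes directly, so thresholding the top-scoring pairs is typically enough. A production version would likely vectorize this with NumPy rather than loop over every query and class in pure Python.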

Once compiled, anyone can run the model from Python, JavaScript (browser and Node.js), Swift (iOS), Kotlin (Android), and more. Here’s an example showing how to run the model in JavaScript (you’ll need an access key, which you can generate for free):

At runtime, the compiled model is downloaded from Muna, loaded into your application, and then invoked with your input image. Don’t take our word for it: try the online demo yourself!

If you have any questions, come bother us on our community Slack. Happy coding!

[1] We compiled the model to use ONNX Runtime, but you can also target CoreML, TensorRT, TensorFlow Lite, OpenVINO, QNN, ExecuTorch, and more. See our docs for details.